Keynotes & Trainings

Shaping technology with responsibility – inspiration that moves

In a rapidly changing world, we need more than just innovation – we need ethical orientation and legal clarity. This is exactly where our keynotes and training sessions come in: we inspire, raise awareness and empower people and organisations to shape technology responsibly.

Whether at conferences, in companies or at public events – our presentations combine scientific depth with practical relevance and show how innovation can be not only possible, but also morally justifiable and legally compliant.

Let yourself be inspired

Below you will find a selection of our latest public lectures and training sessions. Each one provides inspiration for a better, more conscious future.

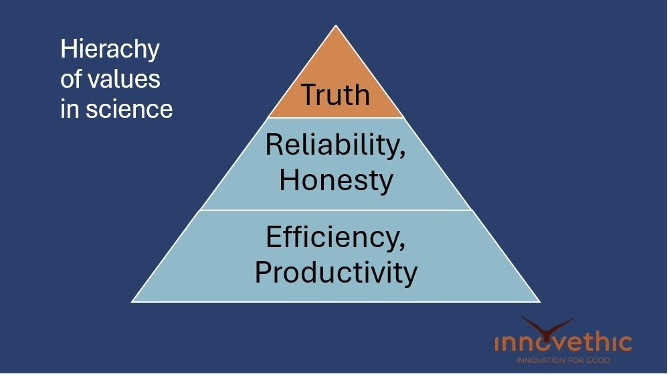

Ethical use of AI in science

Developing AI ethically! How does that work?

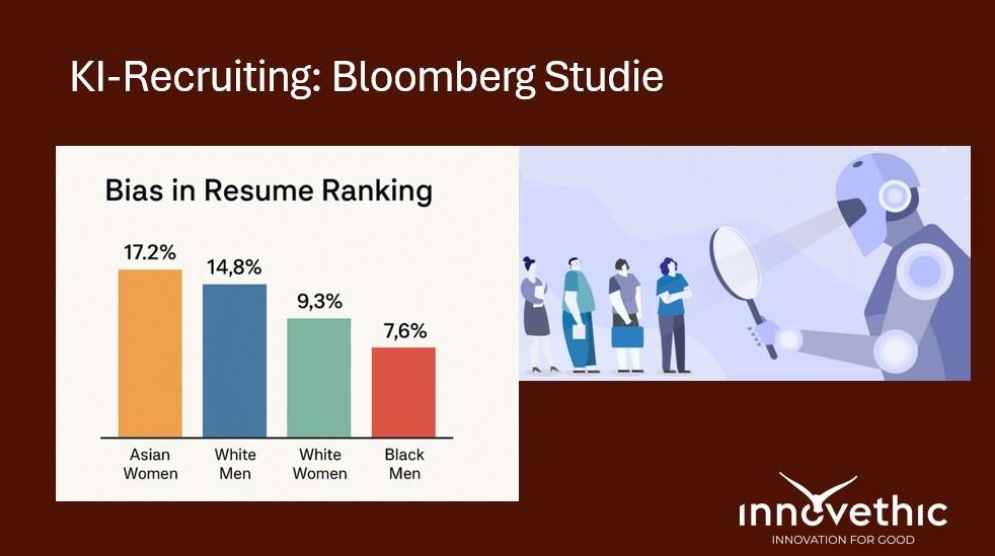

85% of the largest companies use AI in recruiting—but what if these systems reinforce biases instead of recognizing talent? This...

Human in focus – AI in medicine

AI is a powerful tool that can bring both great benefits and considerable harm, depending on how it is designed...

Balancing Innovation & Responsibility: Building Trustworthy AI for Aging

In my talk, I explored how AgeTech — technologies designed to support older adults — can be developed and deployed in...

Child protection in the Internet age

Digital services are now an integral part of children’s lives, but their protection often falls by the wayside. The British...

AI in administration: opportunities and risks

Public administration is also undergoing profound changes as a result of AI. The presentation highlighted how AI can be used...

Acting creatively: Christian responsibility in technical innovation

In his lecture, Lukas Madl emphasised that technical innovation is not incompatible with Christian faith, but can rather be a...